Gzip reduces the size of web content by compressing files before they are sent over the network. It uses the DEFLATE algorithm, which finds repeated byte sequences and replaces them with short back-references, then encodes the result with Huffman coding so that common symbols take fewer bits. Because text-based files such as HTML, CSS, and JavaScript contain a lot of repetition, Gzip shrinks them substantially, which reduces the amount of data transferred and improves website loading times.
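
As a rough illustration of how much repetition matters, the sketch below compresses a made-up HTML fragment with Python's standard `gzip` module. The fragment and the helper name `gzip_ratio` are assumptions for the example; real pages will compress less dramatically.

```python
import gzip


def gzip_ratio(payload: bytes, level: int = 6) -> float:
    """Return compressed size divided by original size for a gzip-compressed payload."""
    compressed = gzip.compress(payload, compresslevel=level)
    return len(compressed) / len(payload)


# Hypothetical HTML fragment with heavily repeated markup.
html = b'<li class="item"><a href="/page">Link</a></li>\n' * 200

compressed = gzip.compress(html)
restored = gzip.decompress(compressed)  # gzip is lossless, so this equals html

print(len(html), len(compressed), round(gzip_ratio(html), 3))
```

In practice the web server or CDN applies this step automatically when the client advertises gzip support.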

The main difference between lossless and lossy compression lies in what happens to the original data. Lossless compression preserves every bit, so the exact original file can be reconstructed on decompression; Gzip, Brotli, and PNG work this way. Lossy compression discards some data to achieve higher compression ratios, which costs some quality. It is used for media such as images, audio, and video (JPEG, for example) and is unsuitable for code or markup, where every character matters.
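
The distinction can be sketched with Python's standard `zlib` module. The sample data and the `lossy_quantize` helper are made up for illustration; quantization here stands in for the kind of detail a lossy image or audio codec throws away.

```python
import zlib


def lossy_quantize(samples: list[int], step: int = 16) -> list[int]:
    """Round each 0-255 sample down to a multiple of `step`, discarding detail."""
    return [(s // step) * step for s in samples]


samples = [(i * 37) % 256 for i in range(1024)]  # made-up 8-bit sample data
raw = bytes(samples)

# Lossless: decompression returns exactly the original bytes.
lossless = zlib.compress(raw, 9)
roundtrip = zlib.decompress(lossless)

# Lossy: quantize first, then compress. The original values cannot be recovered.
quantized = bytes(lossy_quantize(samples))
lossy = zlib.compress(quantized, 9)

print(len(raw), len(lossless), len(lossy), roundtrip == raw, quantized == raw)
```

Quantizing reduces the number of distinct values, which generally helps the compressor, at the cost of exact recovery.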

Multi-dwelling unit (MDU) residents no longer just expect a roof over their heads; they demand a reliable connected existence. Connectivity is key. The internet isnot only an indispensable utility, but one that MDU residents expect property owners to provide. This post explores why a reliable internet service is crucial for property management and the potential consequences of dead spots, slow speeds, and internet downtime.

Posted by on 2024-02-07

Greetings from the technical forefront of Dojo Networks, your community’s internet service provider. In this article, we embark on a technical journey to explore the intricacies of WiFi connectivity within your apartment complex. As WiFi ninjas, we'll delve into the advanced mechanisms and protocols underpinning our managed network, detail the disruptive influence caused by personal routers, and explain why a unified approach from all residents is essential for ensuring optimal internet performance.

Posted by on 2024-01-18

It’s in our DNA. It made us who we are. DojoNetworks got its start more than 20 years ago as an internet company selling retail direct to MDU residents. We sold against the big carriers… one customer at a time. To win over–and retain–customers who assumed the cable company was their only option, we had to provide better value and better service. No other service provider in our industry, no one, has this amount of direct-to-customer experience or success. The carriers were used to being the only game in town, and the other MSPs all started with bulk, knowing they had a captive audience. A few MSPs are just now starting to offer opt-in service and have a year or two of experience.

Posted by on 2023-10-30

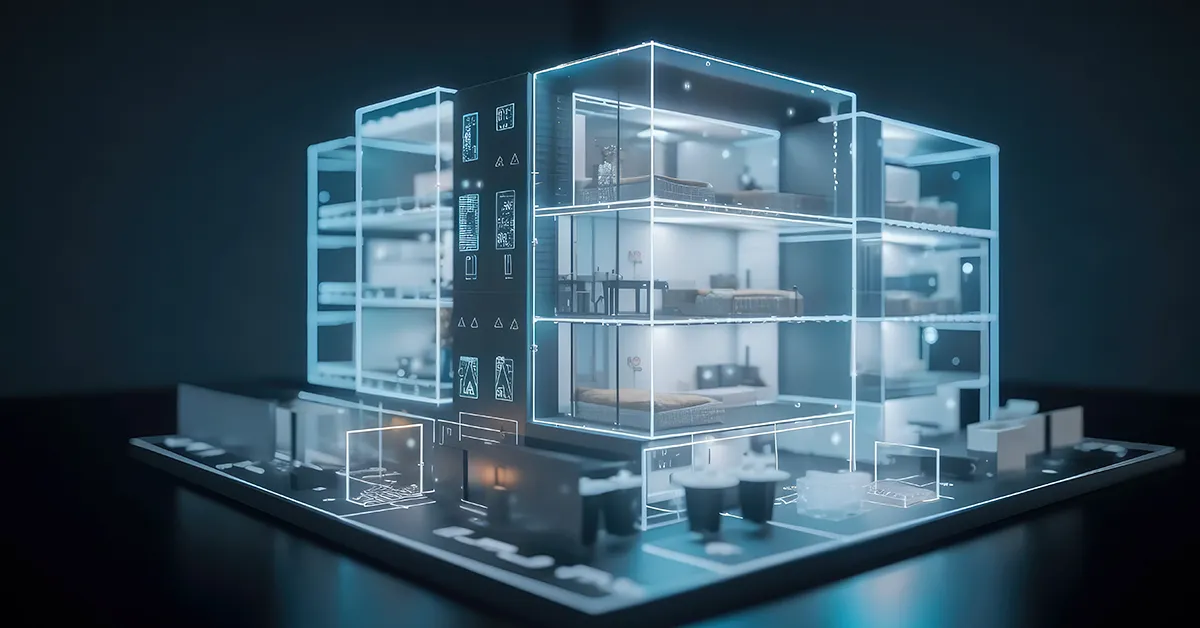

Smart apartment buildings, equipped with cutting-edge technology and automation systems, are becoming the new standard in property management. In this comprehensive guide, we will explore the concept of smart apartment buildings, the benefits they offer to owners and tenants, how to build or upgrade to one, the key features and technologies involved, and the steps to plan and implement a smart apartment building strategy.

Posted by on 2023-09-25

For students and other multi-tenant property residents, high-speed internet service is no longer a luxury. It’s a necessity. Internet access is commonly referred to as the “fourth utility” and is viewed by many to be THE MOST IMPORTANT UTILITY™.

Posted by on 2023-07-20

The Brotli compression algorithm typically achieves better compression ratios than Gzip on text assets. It combines the same basic ideas (back-references to repeated sequences plus Huffman coding) with a larger sliding window, context modeling, and a built-in dictionary of strings that are common in web content. The resulting files are smaller, so web pages load faster because less data needs to be transferred over the network.

Content delivery networks (CDNs) offer several benefits for compressed web content, including faster delivery speeds and improved performance. By storing cached copies of compressed files on servers located closer to the end-users, CDNs reduce latency and improve loading times. Additionally, CDNs can automatically compress files using techniques like Gzip or Brotli, further optimizing web content delivery.

Image optimization plays a crucial role in web content compression because images often make up a large share of a page's total size. Techniques like resizing images to the dimensions at which they are displayed, compressing them, and converting them to more efficient formats such as WebP or AVIF reduce file size with little or no visible loss of quality. By optimizing images, web developers can significantly reduce loading times and improve the user experience on their websites.

HTTP/2 improves the delivery of web content through header compression and multiplexing. Header compression (HPACK) shrinks the repetitive request and response headers, while multiplexing lets multiple requests and responses share a single connection in parallel, reducing latency and improving loading times. Compression of response bodies is separate from the protocol version: the client lists the encodings it accepts, such as Gzip or Brotli, in the Accept-Encoding header and the server picks one, so both work over HTTP/2 as well as HTTP/1.1.
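
Which body compression a response uses is negotiated through the `Accept-Encoding` request header. The following is a minimal sketch of that choice, assuming a server that prefers Brotli over Gzip. The function name `pick_encoding` is hypothetical, and the parsing is simplified: it honors `q=0` exclusions and the `*` wildcard but otherwise follows the server's preference order rather than ranking by q-value.

```python
def pick_encoding(accept_encoding: str, supported=("br", "gzip")) -> str:
    """Pick the first server-preferred encoding the client accepts, else 'identity'."""
    accepted = {}
    for part in accept_encoding.split(","):
        token, _, params = part.strip().partition(";")
        token = token.strip().lower()
        if not token:
            continue
        q = 1.0  # default weight when no q-value is given
        params = params.strip()
        if params.startswith("q="):
            try:
                q = float(params[2:])
            except ValueError:
                q = 0.0  # treat a malformed weight as "not acceptable"
        accepted[token] = q

    for enc in supported:
        # Fall back to the wildcard weight if the encoding is not listed explicitly.
        if accepted.get(enc, accepted.get("*", 0.0)) > 0:
            return enc
    return "identity"


print(pick_encoding("gzip, deflate, br"))  # br
print(pick_encoding("gzip, deflate"))      # gzip
```

A response compressed this way should also carry `Vary: Accept-Encoding` so that caches keep the variants apart.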

Pre-compression techniques like minification and concatenation reduce web content size before Gzip or Brotli is applied. Minification removes comments, whitespace, and other redundant characters from code files without changing their behavior. Concatenation combines multiple files into one, reducing the number of HTTP requests needed to load a webpage; this matters most over HTTP/1.1, since HTTP/2 multiplexing lowers the cost of each extra request. By implementing these techniques, web developers can significantly decrease the size of their web content and improve loading times.
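
Both steps can be sketched in a few lines. The minifier below is deliberately naive (regular expressions only) and would break CSS that relies on whitespace inside strings or selectors, so a production build should use a real minifier; the names `minify_css` and `bundle` and the two stylesheets are made up for the example.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and collapses whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # drop spaces around punctuation
    return css.strip()


def bundle(files: list[str]) -> str:
    """Minify each source and concatenate the results into a single payload."""
    return "".join(minify_css(f) for f in files)


base = """
/* layout */
body {
    margin: 0;
    padding: 0;
}
"""
theme = """
/* colours */
a {
    color: red;
}
"""

bundled = bundle([base, theme])
print(bundled)  # body{margin:0;padding:0;}a{color:red;}
```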

Web cache servers improve the efficiency of bulk internet technologies by storing frequently accessed web content closer to end users, which reduces latency. Deployed as proxy caches on the local network or as edge nodes of a content delivery network (CDN), they answer repeat requests from their own storage instead of going back to the origin server. This speeds up page loads, saves upstream bandwidth, and reduces origin server load. Caches that sit in front of an origin can also help mitigate distributed denial-of-service (DDoS) attacks by absorbing or filtering malicious traffic before it reaches the origin server.
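
The core behavior of such a cache can be sketched as a small in-memory store with expiry and least-recently-used eviction. This is an illustration under simplifying assumptions, not how any particular cache server is implemented: the class name `TTLCache`, the fixed time-to-live, and the `fetch_from_origin` callback are all hypothetical, and real caches also honor HTTP headers such as `Cache-Control`.

```python
import time
from collections import OrderedDict


class TTLCache:
    """Small LRU cache with per-entry expiry, standing in for a web cache server."""

    def __init__(self, max_entries=128, ttl_seconds=60.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = OrderedDict()  # url -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, url, fetch_from_origin):
        now = self.clock()
        entry = self._store.get(url)
        if entry is not None and entry[0] > now:
            self._store.move_to_end(url)  # mark as most recently used
            self.hits += 1
            return entry[1]

        # Miss or expired entry: go back to the origin and store the fresh copy.
        self.misses += 1
        body = fetch_from_origin(url)
        self._store[url] = (now + self.ttl, body)
        self._store.move_to_end(url)
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return body
```

A caller would pass a function that performs the real request, for example `cache.get("/index.html", fetch)`, and only the first call within the time-to-live reaches the origin.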

TCP congestion control in bulk internet technologies relies on four core mechanisms: slow start, congestion avoidance, fast retransmit, and fast recovery. Together they regulate how much unacknowledged data a sender keeps in flight, using network capacity efficiently while preventing congestion. Routers can assist: Random Early Detection (RED) is a queue management scheme that drops or marks packets before a queue is full, and Explicit Congestion Notification (ECN) lets routers mark packets instead of dropping them, so the sender learns about congestion without a loss. The sender adjusts its congestion window and retransmission behavior based on these signals. Variants such as TCP Vegas, Compound TCP, and CUBIC refine the window-adjustment rules to suit different network conditions and traffic patterns.
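
How the congestion window reacts to these signals can be traced with a toy simulation. The sketch below is Reno-style and heavily simplified: one event per round trip, the window measured in segments, slow start capped at the threshold, and no temporary window inflation during fast recovery. The function name `simulate_cwnd` and the event labels are assumptions made for the example.

```python
def simulate_cwnd(events, ssthresh=16.0, cwnd=1.0):
    """Trace a Reno-style congestion window (in segments), one event per round trip.

    events: iterable of "ack" (a full window acknowledged), "dupack" (loss
    detected via three duplicate ACKs), or "timeout" (retransmission timeout).
    """
    trace = []
    for event in events:
        if event == "ack":
            if cwnd < ssthresh:
                cwnd = min(cwnd * 2, ssthresh)  # slow start: double per round trip
            else:
                cwnd += 1.0                      # congestion avoidance: +1 segment
        elif event == "dupack":
            ssthresh = max(cwnd / 2, 2.0)        # fast retransmit and fast recovery:
            cwnd = ssthresh                      # halve the window, skip slow start
        elif event == "timeout":
            ssthresh = max(cwnd / 2, 2.0)
            cwnd = 1.0                           # severe loss: restart from slow start
        else:
            raise ValueError(f"unknown event: {event}")
        trace.append(cwnd)
    return trace


events = ["ack"] * 5 + ["dupack"] + ["ack"] * 2 + ["timeout"] + ["ack"] * 3
print(simulate_cwnd(events))
# [2.0, 4.0, 8.0, 16.0, 17.0, 8.5, 9.5, 10.5, 1.0, 2.0, 4.0, 5.25]
```

The trace shows the characteristic sawtooth: exponential growth up to the threshold, linear growth after it, a halving on duplicate ACKs, and a full reset on timeout.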

Various tools are available for network monitoring and analysis in bulk internet technologies, including Wireshark, SolarWinds Network Performance Monitor, Nagios, PRTG Network Monitor, and Zabbix. Wireshark captures and inspects individual packets, while the others are monitoring platforms that track performance metrics, detect anomalies, and raise alerts in real time. Their reports and visualizations help administrators troubleshoot issues and optimize network performance. By using these tools, organizations can manage their networks proactively, identify potential security threats, and improve overall network efficiency.

Web acceleration in bulk internet technologies utilizes various techniques to improve loading speeds and overall performance. Some common methods include content delivery networks (CDNs), caching, image optimization, minification of code, lazy loading, prefetching, and server-side optimizations. CDNs help distribute content across multiple servers geographically closer to users, reducing latency. Caching stores frequently accessed data locally to reduce the need for repeated requests to the server. Image optimization involves compressing images without compromising quality to decrease file sizes. Minification of code removes unnecessary characters and spaces to reduce file sizes and improve load times. Lazy loading delays the loading of non-essential content until it is needed, while prefetching anticipates user actions to load resources in advance. Server-side optimizations involve configuring servers for faster response times and efficient data processing. By implementing these techniques, web acceleration can significantly enhance the user experience and optimize website performance in bulk internet technologies.

Bulk internet technologies continuously monitor and analyze emerging internet standards and protocols to ensure seamless integration and compatibility with evolving trends. These technologies leverage advanced algorithms and machine learning capabilities to adapt to changes in protocols such as HTTP, TCP/IP, DNS, and IPv6. By staying abreast of developments in areas like cybersecurity, cloud computing, and IoT, bulk internet technologies can proactively adjust their infrastructure to meet the demands of the ever-changing digital landscape. Additionally, these technologies collaborate with industry experts, participate in standardization bodies, and conduct regular audits to guarantee compliance with the latest protocols and standards. Through these proactive measures, bulk internet technologies can effectively navigate the complexities of the evolving internet ecosystem.