Dijkstra's algorithm is a popular method used in network optimization to find the shortest path between nodes in a graph. It works by repeatedly selecting the unvisited node with the lowest tentative cost from the source, updating the costs of that node's neighbors whenever a cheaper path through it is found, and repeating this process until all reachable nodes have been visited. The algorithm is efficient (O((V + E) log V) with a binary-heap priority queue) and is guaranteed to find optimal routes only when all edge weights are non-negative, which makes it a valuable tool for optimizing network performance.
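
A minimal Python sketch of the procedure described above follows. It uses the standard-library `heapq` module as the priority queue; the function names, the adjacency-list format, and the four-router topology are hypothetical and chosen only for illustration.

```python
import heapq
from typing import Dict, List, Tuple

# Adjacency list: node -> list of (neighbor, non-negative edge weight)
Graph = Dict[str, List[Tuple[str, float]]]


def dijkstra(graph: Graph, source: str) -> Tuple[Dict[str, float], Dict[str, str]]:
    """Return shortest-path costs from `source` and a predecessor map."""
    dist: Dict[str, float] = {source: 0.0}
    prev: Dict[str, str] = {}
    visited = set()
    heap = [(0.0, source)]  # (tentative cost, node)

    while heap:
        cost, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry; this node was already finalized
        visited.add(node)
        for neighbor, weight in graph.get(node, []):
            new_cost = cost + weight
            if new_cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_cost  # cheaper path found through `node`
                prev[neighbor] = node
                heapq.heappush(heap, (new_cost, neighbor))
    return dist, prev


def path_to(prev: Dict[str, str], source: str, target: str) -> List[str]:
    """Walk the predecessor map back from target to source (target must be reachable)."""
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    # Hypothetical four-router topology; weights could be link latency in ms.
    net: Graph = {
        "A": [("B", 4), ("C", 1)],
        "C": [("B", 2), ("D", 7)],
        "B": [("D", 1)],
    }
    dist, prev = dijkstra(net, "A")
    print(dist["D"], path_to(prev, "A", "D"))  # 4.0 ['A', 'C', 'B', 'D']
```

Skipping entries that were already finalized (the `visited` check) is a common substitute for a decrease-key operation, which `heapq` does not provide.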

Centralized network optimization algorithms involve a single entity making decisions for the entire network, while distributed algorithms allow individual nodes to make decisions based on local information. The main difference lies in the level of coordination and communication required between nodes. Centralized algorithms can make well-coordinated decisions because they see the whole network, but they may scale poorly, and the central entity becomes a single point of failure. Distributed algorithms adapt to local changes in the network more easily but may require more communication between nodes before they converge on a consistent result.
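
To make the distributed side concrete, here is a minimal sketch of a distance-vector computation (distributed Bellman-Ford), assuming a static topology and synchronous rounds. Each node starts out knowing only the cost of its own links and improves its routing table solely from the tables its neighbors advertise; no node ever holds a full map of the network, which a centralized algorithm such as Dijkstra's would need. The function name and the example topology are hypothetical, and real protocols exchange updates asynchronously and must also handle link failures, which this sketch ignores.

```python
from typing import Dict

INF = float("inf")

# links[node][neighbor] = cost of the direct link (every node must be a key)
Links = Dict[str, Dict[str, float]]


def distance_vector(links: Links, max_rounds: int = 100) -> Dict[str, Dict[str, float]]:
    """Synchronous simulation of distributed Bellman-Ford.

    In each round, every node recomputes its table using only the tables
    its direct neighbors advertised in the previous round.
    """
    nodes = list(links)
    # Initially a node knows only itself and its directly attached links.
    tables = {
        n: {d: 0.0 if d == n else float(links[n].get(d, INF)) for d in nodes}
        for n in nodes
    }
    for _ in range(max_rounds):
        advertised = {n: dict(t) for n, t in tables.items()}  # messages sent this round
        changed = False
        for n in nodes:
            for dest in nodes:
                if dest == n:
                    continue
                # Best route = cheapest (link to neighbor + neighbor's advertised cost).
                best = min(
                    (cost + advertised[nbr][dest] for nbr, cost in links[n].items()),
                    default=INF,
                )
                if best < tables[n][dest]:
                    tables[n][dest] = best
                    changed = True
        if not changed:  # converged: no node learned anything new
            break
    return tables


if __name__ == "__main__":
    # Hypothetical topology with symmetric link costs.
    topo: Links = {
        "A": {"B": 1, "C": 5},
        "B": {"A": 1, "C": 2},
        "C": {"A": 5, "B": 2, "D": 1},
        "D": {"C": 1},
    }
    print(distance_vector(topo)["A"]["D"])  # 4.0 (A -> B -> C -> D)
```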

Multi-dwelling unit (MDU) residents no longer just expect a roof over their heads; they demand a reliable connected existence. Connectivity is key. The internet is not only an indispensable utility, but one that MDU residents expect property owners to provide. This post explores why a reliable internet service is crucial for property management and the potential consequences of dead spots, slow speeds, and internet downtime.

Posted on 2024-02-07

Greetings from the technical forefront of Dojo Networks, your community’s internet service provider. In this article, we embark on a technical journey to explore the intricacies of WiFi connectivity within your apartment complex. As WiFi ninjas, we'll delve into the advanced mechanisms and protocols underpinning our managed network, detail the disruptive influence caused by personal routers, and explain why a unified approach from all residents is essential for ensuring optimal internet performance.

Posted on 2024-01-18

It’s in our DNA. It made us who we are. Dojo Networks got its start more than 20 years ago as an internet company selling retail direct to MDU residents. We sold against the big carriers… one customer at a time. To win over, and retain, customers who assumed the cable company was their only option, we had to provide better value and better service. No other service provider in our industry, no one, has this amount of direct-to-customer experience or success. The carriers were used to being the only game in town, and the other MSPs all started with bulk, knowing they had a captive audience. A few MSPs are just now starting to offer opt-in service and have only a year or two of experience.

Posted on 2023-10-30

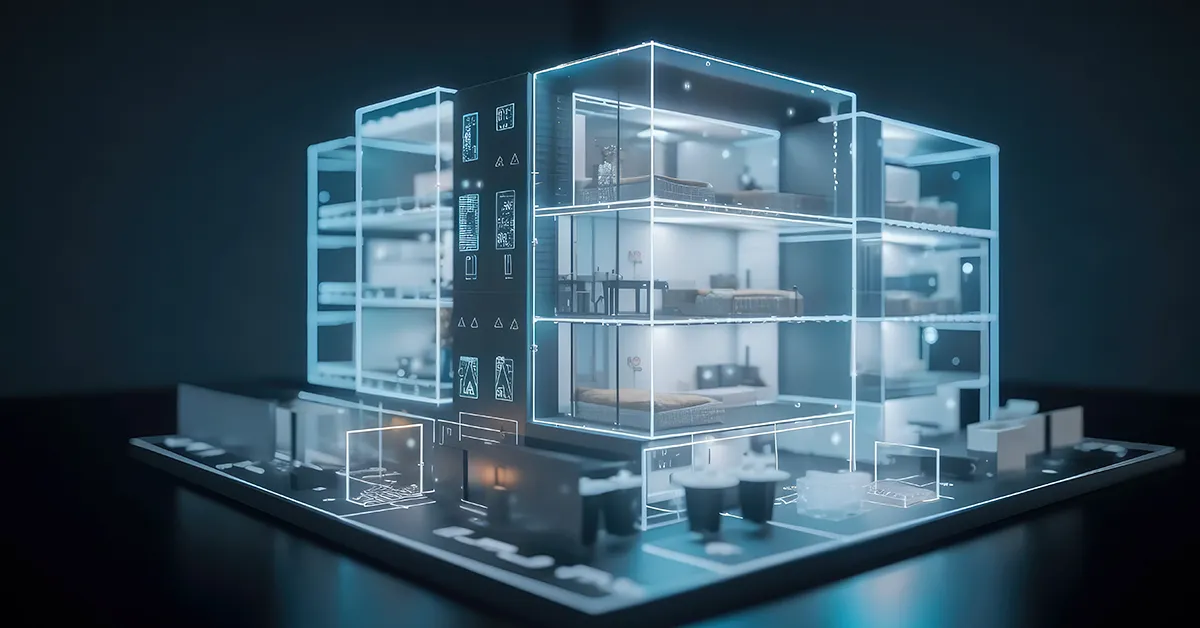

Smart apartment buildings, equipped with cutting-edge technology and automation systems, are becoming the new standard in property management. In this comprehensive guide, we will explore the concept of smart apartment buildings, the benefits they offer to owners and tenants, how to build or upgrade to one, the key features and technologies involved, and the steps to plan and implement a smart apartment building strategy.

Posted on 2023-09-25

For students and other multi-tenant property residents, high-speed internet service is no longer a luxury. It’s a necessity. Internet access is commonly referred to as the “fourth utility” and is viewed by many to be THE MOST IMPORTANT UTILITY™.

Posted on 2023-07-20

Genetic algorithms can indeed be used for network optimization by mimicking the process of natural selection to find optimal solutions. These algorithms generate a population of potential solutions, evaluate their fitness, and then apply genetic operators such as mutation and crossover to evolve better solutions over multiple generations. While genetic algorithms may take longer to converge than traditional algorithms, they explore a wider range of the solution space and are less likely to get stuck in a local optimum, so they can potentially find solutions closer to the global optimum.
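
As a hedged illustration, the sketch below applies a genetic algorithm to a hypothetical toy problem: assigning eight traffic flows to three parallel links so that the busiest link carries as little traffic as possible. The demand values, the operator choices (tournament selection, one-point crossover, per-gene mutation, and elitism), and all parameters are assumptions made for the example, not tuned settings.

```python
import random
from typing import List

# Hypothetical problem data: per-flow demand in Mbps, spread over parallel links.
DEMANDS = [30, 25, 20, 20, 15, 10, 10, 5]
NUM_LINKS = 3

Chromosome = List[int]  # chromosome[i] = index of the link carrying flow i


def max_link_load(ch: Chromosome) -> float:
    loads = [0.0] * NUM_LINKS
    for flow, link in enumerate(ch):
        loads[link] += DEMANDS[flow]
    return max(loads)


def fitness(ch: Chromosome) -> float:
    return -max_link_load(ch)  # higher fitness = lighter busiest link


def tournament(pop: List[Chromosome], rng: random.Random, k: int = 3) -> Chromosome:
    return max(rng.sample(pop, k), key=fitness)  # fittest of k random individuals


def crossover(a: Chromosome, b: Chromosome, rng: random.Random) -> Chromosome:
    cut = rng.randrange(1, len(a))  # one-point crossover
    return a[:cut] + b[cut:]


def mutate(ch: Chromosome, rng: random.Random, rate: float = 0.1) -> Chromosome:
    # Each gene is reassigned to a random link with probability `rate`.
    return [rng.randrange(NUM_LINKS) if rng.random() < rate else g for g in ch]


def genetic_search(pop_size: int = 30, generations: int = 60, seed: int = 1) -> Chromosome:
    rng = random.Random(seed)
    pop = [[rng.randrange(NUM_LINKS) for _ in DEMANDS] for _ in range(pop_size)]
    for _ in range(generations):
        elite = max(pop, key=fitness)  # elitism: the best individual survives unchanged
        children = [
            mutate(crossover(tournament(pop, rng), tournament(pop, rng), rng), rng)
            for _ in range(pop_size - 1)
        ]
        pop = [elite] + children
    return max(pop, key=fitness)


if __name__ == "__main__":
    best = genetic_search()
    print(best, max_link_load(best))
```

Because the best individual is always carried into the next generation, the result can never be worse than the best randomly generated starting assignment. For this demand set the ideal is 45 Mbps per link (135 Mbps split three ways), which gives a simple yardstick for how close a run gets.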

Simulated annealing algorithms improve network optimization solutions by simulating the process of annealing in metallurgy. These algorithms start with an initial solution and iteratively explore neighboring solutions, sometimes accepting a worse solution with a probability that depends on how much worse it is and on a “temperature” parameter, which lets the search escape local optima. By gradually lowering the temperature, and with it the probability of accepting worse solutions, simulated annealing algorithms explore the solution space broadly at first and then settle on a good network optimization solution.
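
The sketch below shows simulated annealing on a hypothetical toy problem: spreading eight traffic flows over three parallel links to minimize the load on the busiest link. A neighboring solution moves one flow to a different link, worse moves are accepted with the Metropolis probability exp(-Δ/T), and the temperature T shrinks geometrically. The starting temperature, cooling factor, and step counts are illustrative assumptions, not recommended settings.

```python
import math
import random
from typing import List, Tuple

# Hypothetical problem data: per-flow demand in Mbps, spread over parallel links.
DEMANDS = [30, 25, 20, 20, 15, 10, 10, 5]
NUM_LINKS = 3


def cost(assign: List[int]) -> float:
    """Load on the busiest link for a given flow-to-link assignment."""
    loads = [0.0] * NUM_LINKS
    for flow, link in enumerate(assign):
        loads[link] += DEMANDS[flow]
    return max(loads)


def simulated_annealing(t_start: float = 50.0, t_end: float = 0.1, alpha: float = 0.95,
                        steps_per_temp: int = 50, seed: int = 7) -> Tuple[List[int], float]:
    rng = random.Random(seed)
    current = [rng.randrange(NUM_LINKS) for _ in DEMANDS]
    current_cost = cost(current)
    best, best_cost = list(current), current_cost
    t = t_start
    while t > t_end:
        for _ in range(steps_per_temp):
            # Neighbor: move one randomly chosen flow to a different link.
            cand = list(current)
            i = rng.randrange(len(cand))
            cand[i] = (cand[i] + rng.randrange(1, NUM_LINKS)) % NUM_LINKS
            delta = cost(cand) - current_cost
            # Metropolis rule: always accept improvements; accept a worse
            # move with probability exp(-delta / t) to escape local optima.
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current, current_cost = cand, current_cost + delta
                if current_cost < best_cost:
                    best, best_cost = list(current), current_cost
        t *= alpha  # geometric cooling: worse moves become rarer over time
    return best, best_cost


if __name__ == "__main__":
    print(simulated_annealing())
```

Tracking the best solution separately matters because the search may wander away from it while the temperature is still high.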

Ant colony optimization algorithms play a crucial role in optimizing network routing and resource allocation by mimicking the foraging behavior of ants. Simulated ants build candidate paths and lay pheromone on them, with better (for example, cheaper) paths receiving more pheromone; at the same time, all pheromone trails slowly evaporate, so the colony gradually concentrates on the best routes found so far. By leveraging this collective intelligence, these algorithms can efficiently find near-optimal solutions for complex network optimization problems.
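
Below is a minimal sketch of ant colony optimization for finding a low-cost route between two routers, assuming a small hypothetical topology in which each link has a fixed cost. Each ant chooses its next hop with probability proportional to pheromone^α × (1/cost)^β; after every iteration all trails evaporate by a factor ρ, and each ant deposits pheromone inversely proportional to its path cost. The function names and parameter values are illustrative assumptions.

```python
import random
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # graph[u][v] = cost of the link u -> v
Pheromone = Dict[Tuple[str, str], float]


def walk(graph: Graph, tau: Pheromone, src: str, dst: str,
         rng: random.Random, alpha: float, beta: float) -> Optional[List[str]]:
    """One ant builds a loop-free path from src to dst (None on a dead end)."""
    path, node = [src], src
    while node != dst:
        choices = [v for v in graph.get(node, {}) if v not in path]
        if not choices:
            return None  # the ant got stuck; discard it
        # Attractiveness of each hop: pheromone^alpha * (1 / link cost)^beta
        weights = [tau[(node, v)] ** alpha * (1.0 / graph[node][v]) ** beta
                   for v in choices]
        node = rng.choices(choices, weights=weights)[0]
        path.append(node)
    return path


def path_cost(graph: Graph, path: List[str]) -> float:
    return sum(graph[u][v] for u, v in zip(path, path[1:]))


def ant_colony_route(graph: Graph, src: str, dst: str, ants: int = 20,
                     iterations: int = 30, alpha: float = 1.0, beta: float = 2.0,
                     rho: float = 0.5, q: float = 1.0,
                     seed: int = 3) -> Tuple[Optional[List[str]], float]:
    """Return the cheapest src -> dst path the colony found (assumes src != dst)."""
    rng = random.Random(seed)
    tau: Pheromone = {(u, v): 1.0 for u in graph for v in graph[u]}
    best_path: Optional[List[str]] = None
    best_cost = float("inf")
    for _ in range(iterations):
        paths = [p for p in (walk(graph, tau, src, dst, rng, alpha, beta)
                             for _ in range(ants)) if p is not None]
        for edge in tau:  # evaporation: old information fades
            tau[edge] *= 1.0 - rho
        for p in paths:  # reinforcement: cheaper paths deposit more pheromone
            c = path_cost(graph, p)
            for u, v in zip(p, p[1:]):
                tau[(u, v)] += q / c
            if c < best_cost:
                best_path, best_cost = p, c
    return best_path, best_cost


if __name__ == "__main__":
    # Hypothetical topology; costs could represent latency or utilization.
    net: Graph = {"A": {"B": 2, "C": 5}, "B": {"C": 1, "D": 6}, "C": {"D": 2}, "D": {}}
    print(ant_colony_route(net, "A", "D"))
```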

Machine learning algorithms can be applied to network optimization problems by using historical data to train models that can predict network behavior and optimize performance. By leveraging techniques such as supervised learning, reinforcement learning, and deep learning, machine learning algorithms can adapt to changing network conditions and make real-time decisions to improve efficiency, reliability, and scalability. The potential benefits include faster decision-making, reduced downtime, and improved resource utilization.
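
Full machine-learning pipelines are beyond the scope of this article, but the core reinforcement-learning idea of learning from feedback can be sketched with an epsilon-greedy multi-armed bandit. In this hypothetical example, a router picks one of three uplinks for each new flow, observes the resulting latency, and gradually shifts traffic toward the link that performs best while still occasionally exploring the others. The uplink names, latency figures, and exploration rate are assumptions, and the latencies are simulated rather than measured.

```python
import random
from typing import Dict, List


class EpsilonGreedyLinkSelector:
    """Learn which uplink to use from observed latency (lower is better)."""

    def __init__(self, links: List[str], epsilon: float = 0.1, seed: int = 0):
        self.links = links
        self.epsilon = epsilon  # fraction of decisions spent exploring
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {link: 0 for link in links}
        self.mean_latency: Dict[str, float] = {link: 0.0 for link in links}

    def choose(self) -> str:
        for link in self.links:
            if self.counts[link] == 0:
                return link  # try every uplink once before exploiting
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.links)  # explore
        return min(self.links, key=lambda link: self.mean_latency[link])  # exploit

    def update(self, link: str, latency_ms: float) -> None:
        # Incremental running mean, so no measurement history has to be stored.
        self.counts[link] += 1
        self.mean_latency[link] += (latency_ms - self.mean_latency[link]) / self.counts[link]


def simulate(rounds: int = 1000, seed: int = 42) -> EpsilonGreedyLinkSelector:
    # Hypothetical true mean latencies in ms; the learner never sees these directly.
    true_mean = {"uplink-a": 40.0, "uplink-b": 25.0, "uplink-c": 60.0}
    noise = random.Random(seed)
    selector = EpsilonGreedyLinkSelector(list(true_mean))
    for _ in range(rounds):
        link = selector.choose()
        selector.update(link, noise.gauss(true_mean[link], 5.0))  # simulated measurement
    return selector


if __name__ == "__main__":
    s = simulate()
    print(s.counts, {k: round(v, 1) for k, v in s.mean_latency.items()})
```

The `epsilon` parameter is the exploration/exploitation trade-off in its simplest form: a higher value reacts faster if conditions change but sends more traffic over worse links in the meantime.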

The key challenges in implementing swarm intelligence algorithms for network optimization lie in balancing exploration and exploitation, handling dynamic network conditions, and scaling to large networks. To address these challenges, researchers are exploring hybrid approaches that combine swarm intelligence with other optimization techniques, developing adaptive algorithms that can adjust parameters based on network feedback, and leveraging parallel computing to handle the computational complexity of large-scale networks. By addressing these challenges, swarm intelligence algorithms can offer innovative solutions for optimizing network performance.

When implementing hybrid cloud content distribution in bulk internet technologies, several considerations must be taken into account. These include factors such as network bandwidth, data security, scalability, latency, and cost-effectiveness. It is important to ensure that the hybrid cloud infrastructure can efficiently handle the distribution of large amounts of content across different networks while maintaining high levels of security to protect sensitive data. Scalability is also crucial to accommodate fluctuations in demand and ensure optimal performance. Additionally, minimizing latency is essential to provide a seamless user experience. Finally, cost-effectiveness should be a priority to maximize the benefits of hybrid cloud content distribution. By carefully addressing these considerations, organizations can effectively leverage hybrid cloud technologies for bulk internet content distribution.

The Internet Cache Protocol (ICP) plays a crucial role in bulk internet technologies by facilitating the efficient sharing of cached web content between proxy servers. Using ICP, a proxy server sends lightweight UDP queries to neighboring caches to determine whether a requested web page is already stored nearby, reducing the need to retrieve the content from the original server. This helps to improve overall network performance, decrease latency, and minimize bandwidth usage. Additionally, ICP enables the creation of hierarchical cache systems, in which sibling and parent proxy servers collaborate to optimize content delivery. Overall, ICP enhances the scalability and reliability of internet caching systems, which are essential components of modern internet infrastructure.
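
For a sense of how lightweight an ICP query is, the sketch below encodes an ICP version 2 query and decodes a HIT or MISS reply. The field layout (a 20-byte header carrying opcode, version, message length, request number, options, option data, and sender address, followed by the payload) and the opcode values follow our reading of RFC 2186; the function names are hypothetical, and the code only builds and parses bytes rather than talking to a real cache.

```python
import socket
import struct
from typing import Tuple

# Values from RFC 2186 (ICP version 2).
ICP_VERSION = 2
ICP_OP_QUERY = 1
ICP_OP_HIT = 2
ICP_OP_MISS = 3

# 20-byte header in network byte order: opcode, version, message length,
# request number, options, option data, sender host address.
HEADER = struct.Struct("!BBHIII4s")


def build_icp_query(request_number: int, url: str, requester_ip: str = "0.0.0.0") -> bytes:
    # QUERY payload: 4-byte requester host address, then the NUL-terminated URL.
    payload = socket.inet_aton(requester_ip) + url.encode("ascii") + b"\x00"
    length = HEADER.size + len(payload)  # the length field includes the header
    header = HEADER.pack(ICP_OP_QUERY, ICP_VERSION, length, request_number,
                         0, 0, socket.inet_aton("0.0.0.0"))
    return header + payload


def parse_icp_reply(data: bytes) -> Tuple[int, int, str]:
    """Decode a HIT or MISS reply, whose payload is just the NUL-terminated URL."""
    opcode, _version, length, request_number, _opts, _opt_data, _sender = HEADER.unpack_from(data)
    url = data[HEADER.size:length].split(b"\x00", 1)[0].decode("ascii")
    return opcode, request_number, url


if __name__ == "__main__":
    query = build_icp_query(7, "http://example.com/")
    print(len(query))  # 20-byte header + 4-byte address + 20-byte URL with NUL = 44
```

In practice the query would be sent over UDP to each neighboring cache (Squid, for example, listens for ICP on port 3130 by default), and the proxy would fetch from a peer that answers HIT, falling back to a parent cache or the origin server when every peer answers MISS.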

Mitigating DDoS attacks in bulk internet technologies can be achieved through a variety of strategies. Implementing rate limiting, traffic filtering, and access control lists can help to reduce the impact of such attacks. Utilizing a content delivery network (CDN) can also distribute traffic across multiple servers, making it more difficult for attackers to overwhelm a single target. Additionally, deploying intrusion detection and prevention systems (IDPS) can help to identify and block malicious traffic in real time. Regularly updating and patching software and systems also closes vulnerabilities that attackers might otherwise exploit. Collaborating with internet service providers (ISPs) and utilizing cloud-based DDoS protection services can provide additional layers of defense against these types of attacks. By employing a combination of these strategies, organizations can better protect their networks and mitigate the risk of DDoS attacks in bulk internet technologies.
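
Rate limiting, the first measure listed above, can be sketched as a per-source sliding-window limiter. This is a minimal in-memory example under stated assumptions: requests are keyed by source IP address, the class name and limits are hypothetical, and the injectable clock exists only to make the behavior easy to test. On its own it will not stop a volumetric attack that saturates the link before traffic reaches the application, and a production version would also need to evict idle sources so that spoofed addresses cannot exhaust memory.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class PerSourceRateLimiter:
    """Allow at most `limit` requests per `window` seconds from each source."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        # Timestamps of recently allowed requests, per source IP.
        self.history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, source_ip: str) -> bool:
        now = self.clock()
        q = self.history[source_ip]
        while q and now - q[0] >= self.window:  # forget requests outside the window
            q.popleft()
        if len(q) >= self.limit:
            return False  # over budget: drop, delay, or challenge this request
        q.append(now)
        return True


if __name__ == "__main__":
    limiter = PerSourceRateLimiter(limit=3, window=1.0)
    print([limiter.allow("203.0.113.9") for _ in range(4)])  # [True, True, True, False]
```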

Reverse proxy servers offer numerous benefits in bulk internet technologies. By utilizing reverse proxies, companies can improve security by hiding the origin server's IP address and protecting it from potential cyber attacks. Additionally, reverse proxies can enhance performance by caching frequently accessed content and reducing the load on the origin server. This can lead to faster response times and improved user experience. Furthermore, reverse proxies can help with scalability by distributing incoming traffic across multiple servers, ensuring that the network can handle high volumes of requests. Overall, the use of reverse proxy servers in bulk internet technologies can result in increased security, improved performance, and enhanced scalability for businesses.
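
The caching benefit can be illustrated with a minimal in-memory sketch: a front end that serves repeated requests from a time-limited cache and contacts the origin only on a miss or after the entry expires. The class name, the `fetch_origin` callback, and the fixed TTL are hypothetical; a real reverse proxy also speaks HTTP, honors `Cache-Control` headers, and bounds its memory use.

```python
import time
from typing import Callable, Dict, Tuple


class CachingReverseProxy:
    """Serve repeated requests from memory so the origin sees fewer of them."""

    def __init__(self, fetch_origin: Callable[[str], bytes], ttl: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch_origin = fetch_origin  # stands in for a request to the origin server
        self.ttl = ttl
        self.clock = clock
        self.cache: Dict[str, Tuple[float, bytes]] = {}  # path -> (expires_at, body)
        self.origin_hits = 0

    def get(self, path: str) -> bytes:
        now = self.clock()
        entry = self.cache.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the origin is not contacted
        self.origin_hits += 1  # miss or expired entry: go to the origin
        body = self.fetch_origin(path)
        self.cache[path] = (now + self.ttl, body)
        return body


if __name__ == "__main__":
    proxy = CachingReverseProxy(lambda p: ("content of " + p).encode(), ttl=30.0)
    for _ in range(100):
        proxy.get("/index.html")
    print(proxy.origin_hits)  # 1
```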

Global server load balancing (GSLB) enhances the scalability of bulk internet technologies by distributing incoming traffic across servers in multiple data centers or geographic locations, based on factors such as server health, the client's location, and server load. By utilizing GSLB, organizations can ensure that their resources are efficiently utilized, preventing any single server or site from becoming overwhelmed with traffic. This results in improved performance, reduced latency, and increased reliability for users accessing bulk internet technologies. Additionally, GSLB allows for seamless failover when a server or site fails, ensuring continuous availability of services. Overall, GSLB plays a crucial role in optimizing the scalability of bulk internet technologies by intelligently managing traffic distribution across servers.
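
The site-selection step can be sketched as follows, assuming each data center reports a health-check result and a utilization figure and that the client's approximate coordinates are known. The policy here (skip unhealthy sites, prefer sites below a load threshold, then pick the nearest by great-circle distance) is one plausible example rather than how any particular GSLB product works; site names, coordinates, and the 85% threshold are hypothetical. Real GSLB deployments typically apply such a policy when answering DNS queries.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt
from typing import List, Optional


@dataclass
class Site:
    name: str
    lat: float
    lon: float
    healthy: bool  # result of the most recent health check
    load: float    # current utilization, 0.0 to 1.0


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance using the haversine formula (Earth radius 6371 km)."""
    dlat, dlon = radians(lat2 - lat1), radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(min(1.0, sqrt(a)))


def pick_site(sites: List[Site], client_lat: float, client_lon: float,
              max_load: float = 0.85) -> Optional[Site]:
    healthy = [s for s in sites if s.healthy]  # failover: skip failed sites
    # Prefer sites with headroom; if every healthy site is busy, use them anyway.
    candidates = [s for s in healthy if s.load < max_load] or healthy
    if not candidates:
        return None  # no healthy site at all
    return min(candidates, key=lambda s: distance_km(client_lat, client_lon, s.lat, s.lon))


if __name__ == "__main__":
    sites = [
        Site("us-east", 39.0, -77.5, healthy=True, load=0.40),
        Site("us-west", 45.6, -121.2, healthy=True, load=0.30),
        Site("eu-west", 53.3, -6.3, healthy=True, load=0.20),
    ]
    print(pick_site(sites, 40.7, -74.0).name)  # client near New York -> us-east
```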

Bandwidth throttling mechanisms can significantly impact the performance of bulk internet technologies by limiting the amount of data that can be transmitted within a given time frame. This can result in slower download and upload speeds, increased latency, and overall decreased network efficiency. The implementation of bandwidth throttling can lead to congestion on the network, packet loss, and reduced quality of service for users engaging in activities such as file sharing, video streaming, and online gaming. Additionally, bandwidth throttling can hinder the scalability and reliability of bulk internet technologies, affecting their ability to handle large volumes of data efficiently. Overall, bandwidth throttling mechanisms can have a detrimental effect on the performance and user experience of bulk internet technologies.
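
To see why throttling adds latency, consider a token-bucket shaper, one common way to enforce a bandwidth cap. The sketch below computes when each packet is allowed to leave: tokens (bytes) refill at the capped rate up to a burst allowance, and a packet that finds too few tokens must wait. The function name and the numbers in the usage example are hypothetical. A shaper delays excess traffic like this, whereas a policer drops it instead, which shows up as packet loss.

```python
from typing import List, Tuple


def token_bucket_departures(packets: List[Tuple[float, int]], rate_bps: float,
                            burst_bytes: int) -> List[float]:
    """Return the time each packet leaves a token-bucket shaper.

    packets: (arrival_time_s, size_bytes) in arrival order, arrivals >= 0.
    Tokens (bytes) refill at rate_bps / 8 per second, capped at burst_bytes.
    Packets are sent in FIFO order.
    """
    tokens = float(burst_bytes)  # the bucket starts full
    last = 0.0                   # time the token count was last updated
    departures = []
    for arrival, size in packets:
        if size > burst_bytes:
            raise ValueError("a packet larger than the bucket can never be sent")
        t = max(arrival, last)   # FIFO: cannot leave before the previous packet
        tokens = min(burst_bytes, tokens + (t - last) * rate_bps / 8)
        if tokens < size:
            t += (size - tokens) * 8 / rate_bps  # wait for the deficit to refill
            tokens = size
        tokens -= size
        last = t
        departures.append(t)
    return departures


if __name__ == "__main__":
    # 8,000 bit/s cap (1,000 bytes/s), 1,500-byte burst, five 1,000-byte packets at t=0.
    print(token_bucket_departures([(0.0, 1000)] * 5, rate_bps=8000, burst_bytes=1500))
    # [0.0, 0.5, 1.5, 2.5, 3.5]
```

Without the cap, all five packets would leave immediately; with it, the last one waits 3.5 seconds, which is the added latency users experience as a slower connection.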

Network optimization algorithms play a crucial role in enhancing the efficiency of bulk internet technologies by utilizing advanced techniques such as load balancing, traffic shaping, and packet prioritization. These algorithms help in minimizing latency, maximizing bandwidth utilization, and improving overall network performance. By analyzing network traffic patterns, identifying bottlenecks, and dynamically adjusting routing paths, network optimization algorithms ensure smooth data transmission and seamless connectivity for large-scale internet applications. Additionally, these algorithms enable better resource allocation, reduce network congestion, and enhance the quality of service for users accessing bulk internet technologies. Overall, network optimization algorithms help networks make better use of their resources and handle bulk internet traffic more efficiently.
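
Packet prioritization can be sketched as a strict-priority scheduler: packets from a higher-priority class always leave before lower-priority ones, and packets within a class keep their arrival order. The class names and their ranking below are hypothetical examples, not a standard mapping.

```python
import heapq
import itertools
from typing import List, Tuple

# Hypothetical traffic classes: lower number = served first.
PRIORITY = {"voice": 0, "video": 1, "web": 2, "bulk": 3}


class PriorityScheduler:
    """Strict-priority packet scheduler, FIFO within each traffic class."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = itertools.count()  # tie-breaker that preserves arrival order

    def enqueue(self, traffic_class: str, packet: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._seq), packet))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]  # highest-priority, oldest packet

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    s = PriorityScheduler()
    for cls, pkt in [("bulk", "backup-1"), ("web", "page-1"), ("voice", "rtp-1")]:
        s.enqueue(cls, pkt)
    print([s.dequeue() for _ in range(len(s))])  # ['rtp-1', 'page-1', 'backup-1']
```

Strict priority is the simplest policy, but it can starve low-priority traffic under sustained load; schedulers such as weighted fair queuing or deficit round robin are common alternatives when every class needs a guaranteed share.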