TCP uses window sizes in congestion control to limit how much data a sender can have in flight before it must wait for an acknowledgment. A window is measured in bytes, although it is often discussed in units of segments. The sender may transmit only up to the smaller of two windows: the receiver's advertised window, which protects the receiver's buffer, and the congestion window, which protects the network. By adjusting the congestion window dynamically as network conditions change, TCP keeps links well utilized without overwhelming them.

The TCP congestion window (cwnd) regulates data flow by capping the amount of unacknowledged data a sender may have outstanding in the network at any time. It is a sender-side limit rather than a buffer: the sender grows it while acknowledgments keep arriving and shrinks it when loss or another congestion signal appears. Reliable delivery itself comes from acknowledgments and retransmission, so the congestion window's job is to keep the sending rate matched to what the path can carry.
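
As a rough illustration of how the window limits sending, the sketch below computes how many more bytes a sender could transmit at a given moment. The function name and the example byte counts are assumptions made for illustration; real TCP stacks track these values inside the kernel.

```python
def sendable_bytes(cwnd: int, rwnd: int, bytes_in_flight: int) -> int:
    """Return how many more bytes the sender may transmit right now.

    cwnd: congestion window in bytes (the sender's estimate of network capacity)
    rwnd: receiver's advertised window in bytes
    bytes_in_flight: data sent but not yet acknowledged
    """
    # The sender is bound by whichever window is smaller.
    effective_window = min(cwnd, rwnd)
    # Never return a negative allowance if the window has just shrunk.
    return max(0, effective_window - bytes_in_flight)


if __name__ == "__main__":
    # Network-limited case: cwnd is the smaller window.
    print(sendable_bytes(cwnd=14600, rwnd=65535, bytes_in_flight=10000))
    # Receiver-limited case: rwnd is smaller than what is already in flight.
    print(sendable_bytes(cwnd=14600, rwnd=8000, bytes_in_flight=10000))
```

In the first call the congestion window is the limiting factor; in the second the receiver's window is, and the sender has to wait for acknowledgments before sending more.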

Multi-dwelling unit (MDU) residents no longer just expect a roof over their heads; they demand a reliable connected existence. Connectivity is key. The internet isnot only an indispensable utility, but one that MDU residents expect property owners to provide. This post explores why a reliable internet service is crucial for property management and the potential consequences of dead spots, slow speeds, and internet downtime.

Posted by on 2024-02-07

Greetings from the technical forefront of Dojo Networks, your community’s internet service provider. In this article, we embark on a technical journey to explore the intricacies of WiFi connectivity within your apartment complex. As WiFi ninjas, we'll delve into the advanced mechanisms and protocols underpinning our managed network, detail the disruptive influence caused by personal routers, and explain why a unified approach from all residents is essential for ensuring optimal internet performance.

Posted by on 2024-01-18

It’s in our DNA. It made us who we are. DojoNetworks got its start more than 20 years ago as an internet company selling retail direct to MDU residents. We sold against the big carriers… one customer at a time. To win over–and retain–customers who assumed the cable company was their only option, we had to provide better value and better service. No other service provider in our industry, no one, has this amount of direct-to-customer experience or success. The carriers were used to being the only game in town, and the other MSPs all started with bulk, knowing they had a captive audience. A few MSPs are just now starting to offer opt-in service and have a year or two of experience.

Posted by on 2023-10-30

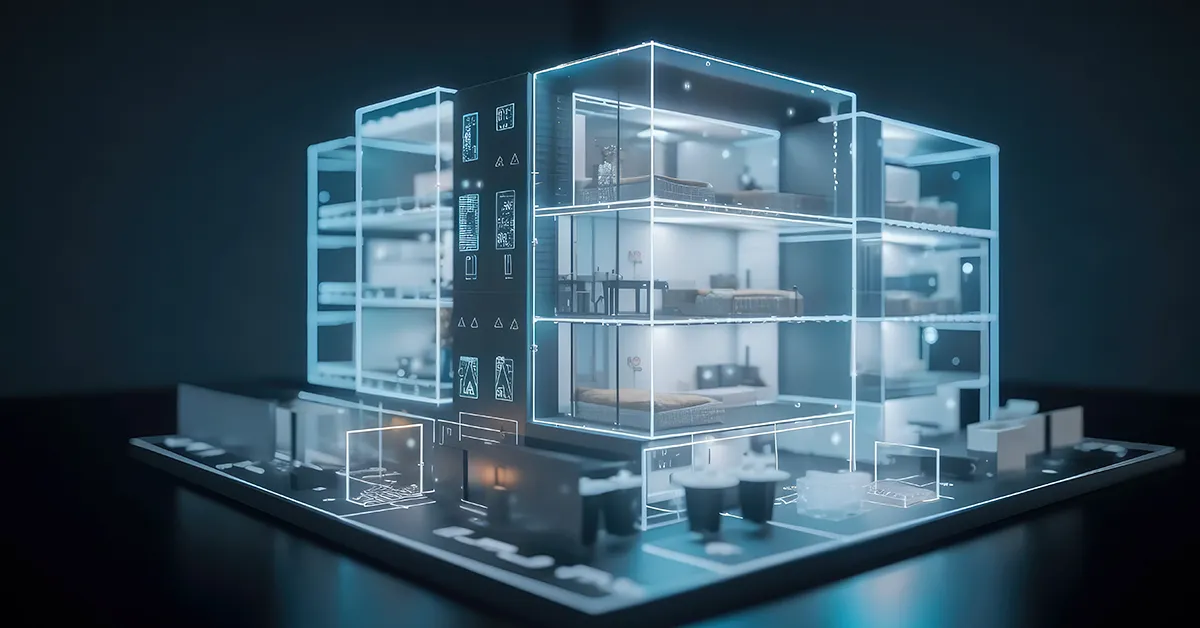

Smart apartment buildings, equipped with cutting-edge technology and automation systems, are becoming the new standard in property management. In this comprehensive guide, we will explore the concept of smart apartment buildings, the benefits they offer to owners and tenants, how to build or upgrade to one, the key features and technologies involved, and the steps to plan and implement a smart apartment building strategy.

Posted by on 2023-09-25

For students and other multi-tenant property residents, high-speed internet service is no longer a luxury. It’s a necessity. Internet access is commonly referred to as the “fourth utility” and is viewed by many to be THE MOST IMPORTANT UTILITY™.

Posted by on 2023-07-20

TCP Reno and TCP Vegas differ in what they treat as a congestion signal. TCP Reno is loss-based: it increases the congestion window until packets are dropped, uses fast retransmit to resend a segment after three duplicate acknowledgments, and then halves the congestion window in fast recovery. TCP Vegas is delay-based: it compares the throughput it expects at the minimum observed round-trip time with the throughput it actually achieves, and it slows down when rising round-trip times indicate that queues are building. Reno therefore reacts after congestion has already caused loss, while Vegas tries to back off before loss occurs.
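
The contrast between the two can be sketched in a few lines. This is a simplified model, not either algorithm's full state machine: the function names are hypothetical, the Reno function only shows the window after fast recovery completes, and the Vegas thresholds `alpha` and `beta` are assumed values (implementations differ on the exact numbers).

```python
from typing import Tuple

MSS = 1460  # assumed maximum segment size in bytes


def reno_on_triple_dupack(flight_size: int, mss: int = MSS) -> Tuple[int, int]:
    """Reno's loss response: return (ssthresh, cwnd) in bytes once fast recovery ends."""
    # Halve the amount of outstanding data, but never go below two segments.
    ssthresh = max(flight_size // 2, 2 * mss)
    return ssthresh, ssthresh


def vegas_adjust(cwnd: float, base_rtt: float, rtt: float,
                 alpha: float = 2.0, beta: float = 4.0) -> float:
    """Vegas' delay response: return the new cwnd in segments after one RTT.

    base_rtt is the smallest RTT seen so far; rtt is the latest measurement.
    """
    expected = cwnd / base_rtt            # throughput if no queues existed
    actual = cwnd / rtt                   # throughput actually achieved
    queued = (expected - actual) * base_rtt  # estimated segments held in queues
    if queued < alpha:
        return cwnd + 1   # path looks underused: grow
    if queued > beta:
        return cwnd - 1   # queues are building: back off before loss
    return cwnd           # within the target band: hold steady


if __name__ == "__main__":
    print(reno_on_triple_dupack(flight_size=29200))
    print(vegas_adjust(cwnd=20, base_rtt=0.100, rtt=0.200))
```

Note that the Reno function needs a loss event to be called at all, while the Vegas function runs every round trip and uses only timing measurements.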

The slow start algorithm lets TCP probe for an appropriate transmission rate when a connection begins or restarts after a timeout. The sender starts with a small congestion window and increases it by one segment for each acknowledgment received, which roughly doubles the window every round-trip time. This exponential growth continues until the window reaches the slow start threshold (ssthresh) or a loss is detected, at which point TCP switches to the slower, linear growth of congestion avoidance. Starting conservatively and ramping up quickly lets TCP find the available capacity without flooding the network with an initial burst.
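
The per-acknowledgment growth and the resulting per-round-trip doubling can be seen in a small simulation. It is a sketch under simplifying assumptions: the window is counted in whole segments, every segment is acknowledged individually (no delayed ACKs), and nothing is lost. The function name is illustrative.

```python
from typing import List


def slow_start_rounds(initial_cwnd: int, ssthresh: int) -> List[int]:
    """Return cwnd (in segments) at the start of each round trip during slow start."""
    cwnd = initial_cwnd
    history = [cwnd]
    while cwnd < ssthresh:
        acks_this_round = cwnd  # one ACK per segment sent in this round trip
        for _ in range(acks_this_round):
            if cwnd >= ssthresh:
                break            # threshold reached: slow start ends here
            cwnd += 1            # each ACK grows the window by one segment
        history.append(cwnd)
    return history


if __name__ == "__main__":
    print(slow_start_rounds(initial_cwnd=1, ssthresh=16))
```

Starting from one segment with a threshold of 16, the window goes 1, 2, 4, 8, 16: four round trips to reach the threshold, after which congestion avoidance would take over.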

TCP uses a retransmission timeout (RTO) to recover segments that are not acknowledged within an expected time. The sender computes the RTO from a smoothed estimate of the round-trip time plus a multiple of its measured variation, so the timer adapts as network conditions change. When the timer expires, TCP retransmits the oldest unacknowledged segment and doubles the RTO (exponential backoff). It also treats the timeout as a strong sign of congestion, collapsing the congestion window to one segment and re-entering slow start. Retransmission provides reliability, while the backoff and window reduction are what relieve the congested path.
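
A minimal sketch of an adaptive timer follows, using the smoothing constants from the standard RTO computation in RFC 6298 (1/8 for the smoothed RTT, 1/4 for the variation, and a multiplier of 4). The class and method names are hypothetical, and the clock granularity and the minimum and maximum bounds are parameters chosen for illustration.

```python
class RtoEstimator:
    """Adaptive retransmission timer in the style of RFC 6298 (illustrative only)."""

    ALPHA = 1 / 8  # gain for the smoothed RTT
    BETA = 1 / 4   # gain for the RTT variation
    K = 4          # variation multiplier

    def __init__(self, granularity: float = 0.001,
                 min_rto: float = 1.0, max_rto: float = 60.0) -> None:
        self.granularity = granularity
        self.min_rto = min_rto
        self.max_rto = max_rto
        self.srtt = None     # smoothed RTT, unset until the first sample
        self.rttvar = None   # RTT variation
        self.rto = 1.0       # initial RTO before any measurement

    def on_rtt_sample(self, r: float) -> float:
        """Update the estimate with a new RTT measurement r (seconds)."""
        if self.srtt is None:
            self.srtt = r
            self.rttvar = r / 2
        else:
            # Update the variation first, using the previous smoothed RTT.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - r)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * r
        rto = self.srtt + max(self.granularity, self.K * self.rttvar)
        self.rto = min(self.max_rto, max(self.min_rto, rto))
        return self.rto

    def on_timeout(self) -> float:
        """Exponential backoff: double the RTO after the timer expires."""
        self.rto = min(self.max_rto, self.rto * 2)
        return self.rto


if __name__ == "__main__":
    est = RtoEstimator(min_rto=0.2)  # lower floor than the default, for demonstration
    print(est.on_rtt_sample(0.100))
    print(est.on_timeout())
```

The floor of 0.2 seconds in the usage example is an assumption for demonstration; with the default floor of one second, short round-trip times would all yield an RTO of one second.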

Explicit Congestion Notification (ECN) lets routers signal congestion before they have to drop packets. When both endpoints have negotiated ECN, a congested router can set the Congestion Experienced (CE) mark in the IP header instead of discarding the packet. The receiver echoes the mark back to the sender in the TCP header, and the sender reduces its congestion window much as it would after a loss, but without needing to retransmit anything. Because the congestion signal arrives without a lost packet, ECN can lower both retransmissions and queueing delay.
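
The router's side of this exchange can be sketched as a simple decision. The two-bit ECN codepoints below are the standard values from the IP header; the function name and the boolean congestion flag are simplifications, since real queue managers decide probabilistically based on queue length or delay.

```python
from typing import Tuple

# ECN codepoints carried in the two ECN bits of the IP header.
NOT_ECT = 0b00  # sender does not support ECN
ECT_1 = 0b01    # ECN-capable transport
ECT_0 = 0b10    # ECN-capable transport
CE = 0b11       # Congestion Experienced


def router_handle(ecn_bits: int, queue_congested: bool) -> Tuple[str, int]:
    """Return (action, ecn_bits) for one packet at a simplified ECN-aware router."""
    if not queue_congested:
        return "forward", ecn_bits  # no congestion: leave the packet untouched
    if ecn_bits == NOT_ECT:
        return "drop", ecn_bits     # endpoint cannot read marks, so drop to signal
    return "forward", CE            # ECN-capable: mark instead of dropping


if __name__ == "__main__":
    print(router_handle(ECT_0, queue_congested=True))
    print(router_handle(NOT_ECT, queue_congested=True))
```

The same congested queue produces a mark for an ECN-capable flow and a drop for a flow that did not negotiate ECN, which is why the benefit depends on both endpoints supporting it.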

Modern TCP congestion control algorithms such as CUBIC and BBR improve on Reno-style approaches, particularly on fast, long-distance paths. CUBIC sets the congestion window with a cubic function of the time elapsed since the last congestion event, so window growth depends less on round-trip time and scales better to high-bandwidth links. BBR, on the other hand, is model-based: it estimates the bottleneck bandwidth and the minimum round-trip time and paces its sending to match them, rather than waiting for packet loss. Both adapt to changing conditions and aim for high throughput, and BBR additionally tries to keep queueing delay low.
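
CUBIC's window curve can be written down directly. The sketch below uses the window function and the constants published in the CUBIC specification (C = 0.4 and a multiplicative decrease factor of 0.7); the function names are illustrative, and the real algorithm adds details such as a Reno-friendly region that are left out here.

```python
C = 0.4           # scaling constant from the CUBIC specification
BETA_CUBIC = 0.7  # window is reduced to 70% of W_max after a congestion event


def cubic_k(w_max: float) -> float:
    """Time (seconds) the curve takes to climb back to w_max after a reduction."""
    return ((w_max * (1 - BETA_CUBIC)) / C) ** (1 / 3)


def cubic_window(t: float, w_max: float) -> float:
    """Congestion window (segments) t seconds after the last congestion event."""
    return C * (t - cubic_k(w_max)) ** 3 + w_max


if __name__ == "__main__":
    w_max = 100.0
    k = cubic_k(w_max)
    for t in (0.0, k / 2, k, 1.5 * k):
        print(f"t={t:5.2f}s  cwnd={cubic_window(t, w_max):7.2f}")
```

The curve starts at 70% of the previous maximum, flattens as it approaches that maximum at time K, and then accelerates again to probe for more bandwidth. Because the input is elapsed time rather than a count of acknowledgments, flows with different round-trip times grow at similar rates.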

Distributed file systems (DFS) offer numerous advantages in bulk internet technologies. One key benefit is improved scalability, as DFS allows for the storage and retrieval of large amounts of data across multiple servers, enabling efficient handling of high volumes of information. Additionally, DFS enhances fault tolerance by replicating data across different nodes, reducing the risk of data loss in case of hardware failures or network issues. Another advantage is increased performance, as DFS enables parallel processing and load balancing, leading to faster data access and processing speeds. Furthermore, DFS supports data sharing and collaboration among users in a distributed environment, promoting seamless communication and teamwork. Overall, the use of DFS in bulk internet technologies can significantly enhance data management, reliability, and efficiency.

When implementing hybrid cloud content distribution in bulk internet technologies, several considerations must be taken into account. These include factors such as network bandwidth, data security, scalability, latency, and cost-effectiveness. It is important to ensure that the hybrid cloud infrastructure can efficiently handle the distribution of large amounts of content across different networks while maintaining high levels of security to protect sensitive data. Scalability is also crucial to accommodate fluctuations in demand and ensure optimal performance. Additionally, minimizing latency is essential to provide a seamless user experience. Finally, cost-effectiveness should be a priority to maximize the benefits of hybrid cloud content distribution. By carefully addressing these considerations, organizations can effectively leverage hybrid cloud technologies for bulk internet content distribution.

The Internet Cache Protocol (ICP) plays a crucial role in bulk internet technologies by facilitating the efficient sharing of cached web content between proxy servers. ICP is a lightweight, UDP-based query protocol: a proxy that misses in its own cache asks neighboring caches whether they hold the requested object, and fetches it from a neighbor that answers with a hit instead of retrieving it from the origin server. This helps to improve overall network performance, decrease latency, and minimize bandwidth usage. Additionally, ICP enables the creation of hierarchical cache systems, where multiple proxy servers collaborate as parents and siblings to optimize content delivery. Overall, ICP enhances the scalability and reliability of cooperative caching systems.

Mitigating DDoS attacks in bulk internet technologies can be achieved through a variety of strategies. Implementing rate limiting, traffic filtering, and access control lists can help to reduce the impact of such attacks. Utilizing a content delivery network (CDN) can also distribute traffic across multiple servers, making it more difficult for attackers to overwhelm a single target. Additionally, deploying intrusion detection and prevention systems (IDPS) can help to identify and block malicious traffic in real-time. Regularly updating and patching software and systems can also help to prevent vulnerabilities that attackers may exploit. Collaborating with internet service providers (ISPs) and utilizing cloud-based DDoS protection services can provide additional layers of defense against these types of attacks. By employing a combination of these strategies, organizations can better protect their networks and mitigate the risk of DDoS attacks in bulk internet technologies.
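
Rate limiting, the first strategy mentioned above, is often implemented with a token bucket. The sketch below is one minimal way to do it, not a production defense: the class name, the per-client usage, and the choice to pass timestamps in explicitly (which keeps the example deterministic) are all assumptions made for illustration.

```python
class TokenBucket:
    """Allow short bursts up to `capacity` while enforcing an average `rate` per second."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start with a full bucket
        self.last = 0.0           # timestamp of the previous check (seconds)

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        # Refill for the time that has passed, without exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # over the limit: drop, delay, or challenge the request


if __name__ == "__main__":
    bucket = TokenBucket(rate=1.0, capacity=2.0)
    # Three requests at the same instant: the third exceeds the burst size.
    print([bucket.allow(now=0.0) for _ in range(3)])
    # One second later a single token has been refilled.
    print(bucket.allow(now=1.0))
```

In practice a limiter like this would typically keep one bucket per client address or per API key, so that a flood from one source exhausts only its own allowance.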

Reverse proxy servers offer numerous benefits in bulk internet technologies. By utilizing reverse proxies, companies can improve security by hiding the origin server's IP address and protecting it from potential cyber attacks. Additionally, reverse proxies can enhance performance by caching frequently accessed content and reducing the load on the origin server. This can lead to faster response times and improved user experience. Furthermore, reverse proxies can help with scalability by distributing incoming traffic across multiple servers, ensuring that the network can handle high volumes of requests. Overall, the use of reverse proxy servers in bulk internet technologies can result in increased security, improved performance, and enhanced scalability for businesses.